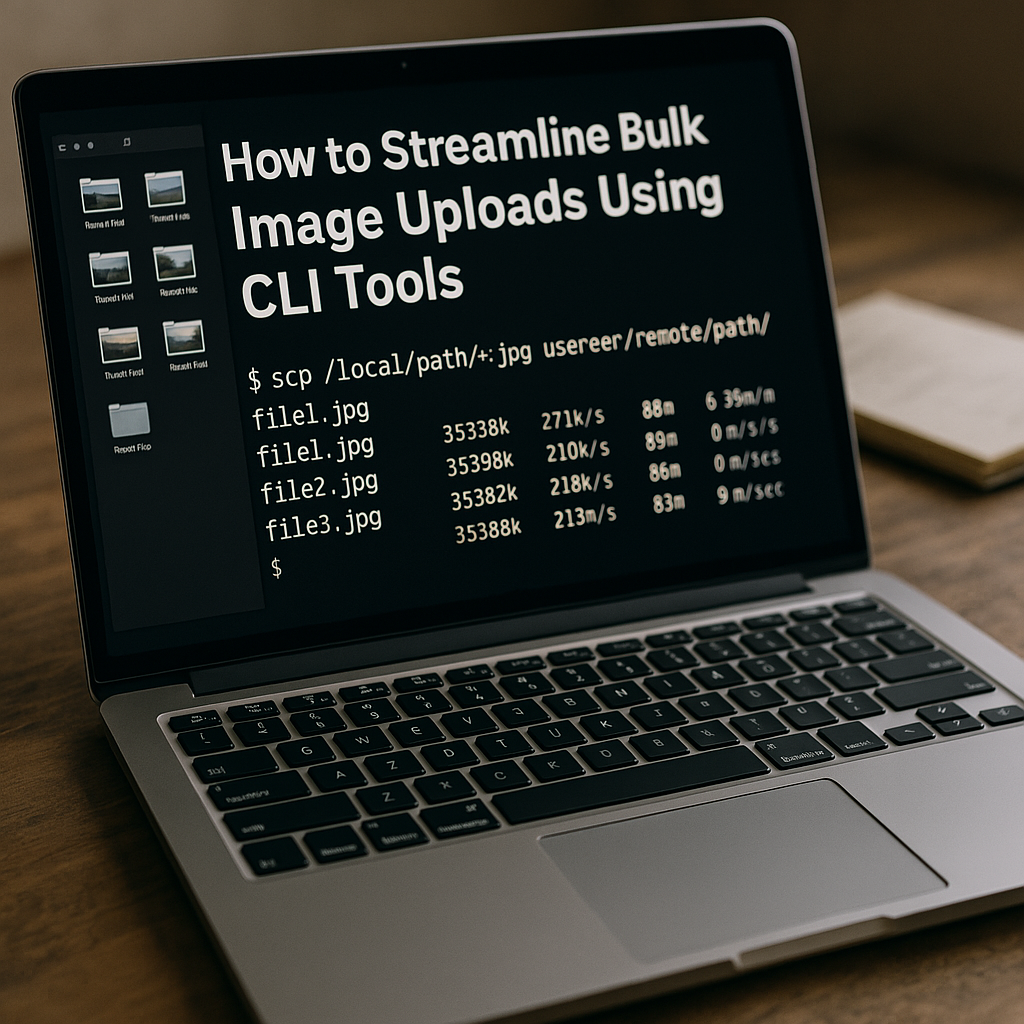

How to Streamline Bulk Image Uploads Using CLI Tools

Managing images is integral to modern web development and content creation. Whether you’re running an e-commerce platform that needs to upload thousands of product photos or a personal blog with high-resolution images, streamlining your bulk image upload process can save time, reduce errors, and improve overall efficiency. While some developers and content managers still rely on graphical user interfaces (GUIs) for uploading files, command-line interface (CLI) tools offer a more automated, script-friendly environment ideal for high-volume workflows.

This in-depth guide walks you through:

- Why bulk image uploading matters for performance and productivity.

- A rundown of popular CLI tools like cURL, Wget, scp, rsync, AWS CLI, and more.

- Best practices for directory structures, file naming, and handling large volumes of images.

- Examples and scripts for automating uploads to different destinations, including remote servers and object storage platforms.

- Strategies for logging, monitoring, and avoiding common pitfalls.

By the end, you’ll be fully equipped to streamline and optimize your bulk image uploads—and have a framework you can easily expand upon as your needs grow.

1. The Case for Bulk Image Upload via CLI

When dealing with a few images here and there, a basic drag-and-drop UI might suffice. However, as soon as you’re handling dozens, hundreds, or even tens of thousands of images, the limitations of graphical interfaces become clear:

- Lack of Automation: GUI-based tools often require manual action for each upload.

- Limited Logging: It’s easier to lose track of errors or partial uploads without a command history or logs.

- Low Efficiency: GUIs might struggle with concurrency; if the tool crashes, you may have to reinitiate the entire sequence.

By contrast, CLI tools allow you to:

- Automate tasks via shell scripts or cron jobs.

- Verify uploads through logs, exit codes, or verbose settings.

- Achieve parallel or concurrent uploads through built-in or third-party utilities.

- Integrate seamlessly with broader DevOps pipelines and CI/CD workflows.

In short, CLI approaches let you handle large-scale image uploads reliably, efficiently, and in a highly customizable way.

2. Understanding the Command Line for Image Management

If you’re new to the command line, here are some foundational concepts:

- Shell Environment: You might be using Bash, Zsh, or PowerShell. Each shell has unique syntax quirks, but the bulk-upload principles remain similar.

- File Paths: Ensure you understand absolute (/home/user/images/...) vs. relative paths (./images/...).

- Permissions: Many upload failures stem from permission issues. Know how to set correct ownership and file permissions, especially in Linux or macOS environments.

- Exit Codes: CLI tools typically return a code indicating success or error. Leveraging exit codes in scripts is essential for robust error handling.

Master these basics to confidently navigate and troubleshoot any CLI-based workflow.
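
As a minimal sketch of the exit-code idea, the function below wraps any single upload command and branches on its status. The name run_upload and the upload_errors.log file are illustrative assumptions, not part of any tool mentioned here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run any upload command and record failures.
# ERROR_LOG is an assumed location; change it to suit your setup.
ERROR_LOG="${ERROR_LOG:-upload_errors.log}"

run_upload() {
  # "$@" is the complete command to run, passed as separate arguments.
  if "$@"; then
    echo "OK: $*"
  else
    local status=$?   # exit code of the failed command
    echo "FAILED (exit $status): $*" | tee -a "$ERROR_LOG" >&2
    return "$status"
  fi
}
```

A call might look like `run_upload scp photo.jpg user@server:/remote/path`. Because the function returns the original exit code, a calling script can still decide whether to stop or continue.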

3. Key CLI Tools for Bulk Image Uploads

Here is a quick overview of popular CLI utilities that can streamline bulk image uploads:

3.1 cURL

- Usage: Often used for transferring data to/from servers via various protocols (HTTP, HTTPS, FTP).

- Strengths: Highly configurable, supports SSL/TLS, widely available across platforms.

Typical Command:

curl -F 'file=@/path/to/image.jpg' https://example.com/upload

3.2 Wget

- Usage: Primarily designed for retrieving content, but newer versions can also send a file as a request body with the --method and --body-file options.

- Strengths: Great for mirroring entire directories from remote servers.

Typical Command:

wget --method=PUT --body-file=image.jpg https://example.com/upload

3.3 scp (Secure Copy)

- Usage: Copies files securely between local/remote systems using SSH.

- Strengths: Easy for direct server-to-server or local-to-server uploads; secure encryption.

Typical Command:

scp /local/path/*.jpg user@server:/remote/path

3.4 rsync

- Usage: Synchronizes files between two locations, often used for incremental backups.

- Strengths: Efficient for large volumes, only transfers differences. Data is encrypted in transit when rsync runs over SSH.

Typical Command:

rsync -avz /local/images/ user@server:/remote/images/

3.5 AWS CLI (S3)

- Usage: Manages AWS services directly via the CLI, especially useful for Amazon S3 storage.

- Strengths: Seamless integration with AWS ecosystem for large-scale and distributed storage.

Typical Command:

aws s3 cp /local/path/ s3://my-bucket/ --recursive

3.6 rclone

- Usage: Syncs files to and from various cloud storage solutions (Google Drive, Dropbox, etc.).

- Strengths: Supports multiple remote providers; robust encryption options.

Typical Command:

rclone copy /local/images remote:images

Understanding these tools provides a solid footing to choose the best fit for your operational needs and scale.

4. Setting Up Your Environment and Workspace

Before you start uploading any images, it’s beneficial to standardize your development environment:

- Naming Conventions: Use consistent file names that are easily searchable (e.g., product001.jpg, blog_banner_2023.png). Avoid spaces and special characters where possible.

- Use Version Control: If relevant, store scripts and metadata files in Git or another VCS to track changes in your upload process.

- Credentials Management: Keep authentication details (API keys, SSH keys) in a secure file or password manager. Tools like ssh-agent or .env files can help.

Directory Organization: Maintain a clear folder hierarchy, for example:

images/
├── product_photos/
├── blog_assets/
├── user_avatars/
└── thumbnails/

A well-organized environment reduces confusion and fosters smoother execution when you’re dealing with large sets of images.
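
The naming advice can be enforced with a small script. The sketch below lowercases names and replaces spaces and special characters with underscores; sanitize_name and rename_images are hypothetical helper names, and the exact character whitelist is an assumption you may want to adjust.

```shell
# Hypothetical helper: turn "My Photo (1).JPG" into "my_photo_1.jpg".
sanitize_name() {
  printf '%s' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -E 's/[^a-z0-9._-]+/_/g; s/_+\././g; s/^_+|_+$//g'
}

# Rename every regular file in a directory to its sanitized name.
rename_images() {
  local dir="$1" path base clean
  for path in "$dir"/*; do
    [ -f "$path" ] || continue
    base="$(basename "$path")"
    clean="$(sanitize_name "$base")"
    if [ "$base" != "$clean" ]; then
      mv -n -- "$path" "$dir/$clean"   # -n: never overwrite an existing file
    fi
  done
}
```

Running `rename_images images/product_photos` before an upload keeps remote paths predictable. Try it on a copy of the directory first, since renames are not logged.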

5. Workflow Best Practices

5.1 Prepare Images Ahead of Time

Ensure you compress or resize images if needed. This step can significantly reduce upload time and storage usage.

5.2 Check Integrity

Use checksums like MD5 or SHA-256 to verify file integrity before and after uploads. This ensures no corruption occurred during transit.
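
One way to apply this is a checksum manifest: record a SHA-256 hash for every file before the upload, then check the uploaded copy against the same list. This is a sketch that assumes GNU coreutils sha256sum is installed (on macOS, shasum -a 256 is the usual stand-in); make_manifest and verify_manifest are hypothetical names.

```shell
# Write a SHA-256 manifest for every file under DIR.
# Keep the manifest outside DIR so it does not list itself.
make_manifest() {
  local dir="$1" manifest="$2"
  ( cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) > "$manifest"
}

# Verify DIR against a manifest; returns non-zero on any mismatch or missing file.
verify_manifest() {
  local dir="$1" manifest="$2"
  # The manifest is fed on stdin so relative manifest paths still work after cd.
  ( cd "$dir" && sha256sum --check --quiet ) < "$manifest"
}
```

Locally you might run `make_manifest /home/user/images images.sha256`, upload, and then run the same check on the server, for example by piping the manifest over SSH into `sha256sum --check --quiet` inside the remote directory.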

5.3 Batch and Grouping

Group your images logically (e.g., by category, date, or user) to parallelize certain operations and keep your workflow tidy.

5.4 Use Verbose or Logging Modes

Many CLI tools have verbose options (-v, --verbose) that output detailed logs. This helps in debugging and record-keeping.

5.5 Monitor Network and Server Load

Bulk uploads can strain your network and remote servers. Monitor load to ensure you’re not saturating resources or violating rate limits.

6. Practical Examples: Step-by-Step CLI Uploads

This section explores real-world commands for bulk uploading images with some of the tools covered above.

6.1 Using cURL for a Form-Based Upload

Let’s say you have a web service (e.g., a CMS) that accepts images via POST:

# Example directory: /home/user/images/*.jpg
for img in /home/user/images/*.jpg
do
  echo "Uploading $img..."
  # --fail makes curl exit non-zero on HTTP errors such as 404 or 500
  curl --fail -F "file=@${img}" https://example.com/api/upload
done

- Explanation: The -F option simulates form data submission.

- Advantages: Straightforward approach for websites with multipart form endpoints.

- Potential Challenge: Might be slower if you have thousands of images; consider parallelization.
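
For the parallelization mentioned above, one option is to let xargs run several curl processes at once. This is a sketch, not a tuned implementation: upload_parallel is a hypothetical name, the default of 4 jobs is an arbitrary starting point, and the endpoint is the same placeholder URL used in the loop.

```shell
# Upload every .jpg in DIR with up to JOBS concurrent curl processes.
# upload_parallel <dir> <endpoint> [jobs]
upload_parallel() {
  local dir="$1" endpoint="$2" jobs="${3:-4}"
  # -print0 / -0 keep file names with spaces intact; -I {} runs one curl per file.
  find "$dir" -maxdepth 1 -type f -name '*.jpg' -print0 \
    | xargs -0 -P "$jobs" -I {} \
        curl --fail --silent --show-error -o /dev/null -F "file=@{}" "$endpoint"
}
```

For example, `upload_parallel /home/user/images https://example.com/api/upload 8` runs eight uploads at a time. xargs returns a non-zero status if any curl invocation failed, so the function can be used directly in an if statement. Start with a low job count so you do not trip the server's rate limits.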

6.2 Using scp for Server-to-Server Transfers

If you have SSH access to a remote server:

scp /home/user/images/*.jpg user@remotehost:/var/www/images/

- Explanation: scp makes secure, encrypted connections.

- Advantages: Quick and easy for direct remote folder uploads.

- Potential Challenge: Lacks advanced sync features if partial uploads fail.

6.3 Using rsync for Synchronization

With incremental or repeated uploads, rsync is powerful:

rsync -avz /home/user/images/ user@remotehost:/var/www/images/

- Explanation: -a preserves attributes, -v is verbose, and -z compresses data during transfer.

- Advantages: Only differences or new files are transferred, saving time.

- Potential Challenge: Requires proper usage of trailing slashes. Without them, you might create nested folders incorrectly.
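
To take the guesswork out of trailing slashes, a small wrapper can normalize both paths before calling rsync. This is a sketch under the assumption that you always want to copy the contents of the source directory; sync_images is a hypothetical name.

```shell
# Sync the contents of SRC into DEST.
# Stripping and re-adding the slash leaves exactly one trailing "/",
# so rsync copies what is inside SRC rather than SRC itself.
# sync_images <src> <dest> [extra rsync options...]
sync_images() {
  local src="${1%/}/" dest="${2%/}/"
  shift 2
  rsync -av "$@" "$src" "$dest"
}
```

A cautious first run could be `sync_images /home/user/images user@remotehost:/var/www/images --dry-run`, which lists what would be transferred without copying anything.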

6.4 Using AWS CLI (S3) for Cloud Storage

For Amazon S3:

aws s3 cp /home/user/images/ s3://my-bucket/images/ --recursive

- Explanation: --recursive ensures every file/subfolder is uploaded.

- Advantages: Integrates natively with AWS.

- Potential Challenge: Learning AWS authentication and bucket policies.
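
For repeated uploads, aws s3 sync is a common alternative to cp --recursive because it skips objects that already match. The sketch below swaps in sync and restricts the transfer to image files. s3_sync_images is a hypothetical name, and the list of extensions is an assumption.

```shell
# Mirror only image files to S3.
# s3_sync_images <local_dir> <s3_uri> [extra aws options, e.g. --dryrun]
s3_sync_images() {
  local src="$1" dest="$2"
  shift 2
  # Filters are applied in order: exclude everything, then re-include images.
  aws s3 sync "$src" "$dest" \
    --exclude '*' \
    --include '*.jpg' --include '*.png' --include '*.webp' \
    "$@"
}
```

Running `s3_sync_images /home/user/images/ s3://my-bucket/images/ --dryrun` prints the planned operations without uploading, which is a cheap way to check the filters before a large transfer.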

6.5 Using rclone for Multi-Cloud Support

If you want to sync images to Google Drive, Dropbox, or another cloud:

rclone copy /home/user/images remote:my-folder

- Explanation: rclone requires initial configuration via rclone config.

- Advantages: Supports numerous providers and encryption.

- Potential Challenge: Must handle each provider’s API limits and credential quirks.

Each of these approaches can form the backbone of a robust bulk image upload process.

7. Automation and Scripting Techniques

Automation is central to maximizing the efficiency of CLI-based workflows. Here are a few common techniques:

- Shell Scripts

  - Encapsulate all upload commands in a .sh or .bat file.

  - Integrate environment checks, error handling, logging, and concurrency.

- Task Scheduling

  - Cron (Linux/macOS): crontab -e to schedule periodic tasks (e.g., nightly uploads).

  - Task Scheduler (Windows): Automate .bat scripts to run at specified intervals.

- CI/CD Integration

  - Tools like Jenkins, GitHub Actions, or GitLab CI can run your scripts automatically on push events or schedule.

  - Great for combining code deployment with asset uploads for a frictionless workflow.

These strategies reduce manual work, minimize human errors, and help maintain consistent, predictable upload processes.
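
A scheduled job has no terminal to print to, so it helps to wrap the upload in a script that timestamps its own log. The following is a sketch; the log location, the function names, and the script path in the crontab comment are all assumptions.

```shell
# Hypothetical wrapper for scheduled runs: timestamps each step, appends to a
# log file, and passes the upload command's exit code back to the scheduler.
LOG_FILE="${LOG_FILE:-$HOME/upload.log}"

log() {
  printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*" >> "$LOG_FILE"
}

# run_scheduled_upload <command...>
run_scheduled_upload() {
  log "START: $*"
  if "$@" >> "$LOG_FILE" 2>&1; then
    log "DONE"
  else
    local status=$?
    log "FAILED with exit code $status"
    return "$status"
  fi
}

# Example crontab entry (edit with `crontab -e`); runs nightly at 02:00.
# The script path is an assumption:
# 0 2 * * * /home/user/bin/nightly_upload.sh
```

Inside the scheduled script you would call something like `run_scheduled_upload rsync -avz /home/user/images/ user@remotehost:/var/www/images/`.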

8. Performance Tuning and Parallel Uploads

Large-scale uploads can become time-consuming if handled sequentially. A few ways to speed things up:

- Parallelization: Use utilities like GNU Parallel or xargs -P. The aws s3 commands already transfer several files concurrently; the limit is set by the max_concurrent_requests option in the AWS CLI configuration, not by a command-line flag.

- Connection Reuse: Repeatedly establishing connections to remote servers can slow uploads. Some tools support persistent connections to reduce overhead.

- Compression: Use compressed image formats (e.g., .jpg or .webp) to reduce file sizes before uploading. Tools like rsync -z also compress data in transit, although images that are already compressed gain little from it.

- Resource Limits: Monitor CPU, memory, and network usage. Over-parallelizing can cause diminishing returns or server timeouts.

- CDN Integration: If your end goal is global distribution, uploading directly to a Content Delivery Network can drastically reduce latency for end-users, although it might require additional steps or specialized CLI commands.

9. Security Considerations

Security is paramount, particularly when handling sensitive or proprietary images.

- Encryption: Use tools that automatically encrypt data in transit (scp, rsync over SSH, HTTPS for cURL). For at-rest encryption, rely on server-side encryption (e.g., S3 SSE) or full-disk encryption locally.

- Authentication: Protect SSH keys (chmod 600), use Multi-Factor Authentication if supported by your cloud provider, and rotate credentials periodically.

- Access Control: Enforce the principle of least privilege: only allow upload access to essential directories or S3 buckets.

A secure setup guards against unauthorized access and data breaches, maintaining trust and compliance.
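
The chmod 600 advice can be checked automatically before a script touches the network. This sketch refuses to continue when a private key has looser permissions; check_key_perms is a hypothetical name, and treating 400 as acceptable alongside 600 is an assumption.

```shell
# Refuse to proceed if a private key is accessible to anyone but its owner.
# check_key_perms <path_to_private_key>
check_key_perms() {
  local key="$1"
  [ -f "$key" ] || { echo "missing key: $key" >&2; return 2; }
  # find prints the path only when the mode is exactly 600 or 400.
  if [ -n "$(find "$key" \( -perm 600 -o -perm 400 \))" ]; then
    return 0
  fi
  echo "insecure permissions on $key; run: chmod 600 $key" >&2
  return 1
}
```

A script might start with `check_key_perms "$HOME/.ssh/id_ed25519" || exit 1`, where the key file name is only an example.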

10. Error Handling, Logging, and Monitoring

When you’re pushing thousands of images at once, errors are inevitable—but they don’t have to be unmanageable.

- Exit Codes: Many tools return non-zero exit codes upon failure. Script logic can use if [ $? -ne 0 ]; then ... fi to catch these errors.

- Monitoring Tools: Tools like Nagios, Zabbix, or cloud-native logging solutions can alert you to abnormal conditions like high error rates or slow transfer speeds.

- Retries and Resume: Some utilities, like rsync, skip files that have already arrived when you rerun them after a broken connection (add --partial to keep partially transferred files). Others (e.g., curl) might require manual logic with a loop to retry uploads.

- Verbose Logs: The -v or --verbose flags in commands like rsync or curl produce detailed logs you can redirect to files.

rsync -avz /local/images/ user@host:/remote/images/ 2>&1 | tee upload_log.txt

Incorporating robust error handling ensures minimal downtime and maximum data integrity.
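
For tools that do not retry on their own, a generic retry loop with a growing delay is one common pattern. This is a sketch; retry is a hypothetical helper, and doubling the delay after each failure is a design choice rather than a requirement.

```shell
# Retry a command, doubling the wait between attempts.
# retry <max_tries> <initial_delay_seconds> <command...>
retry() {
  local max_tries="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}
```

For example, `retry 5 2 curl --fail -F "file=@image.jpg" https://example.com/api/upload` tries up to five times, waiting 2, 4, 8, and 16 seconds between attempts. The --fail flag matters here: without it, curl exits with 0 on most HTTP error responses and the loop would never retry.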

11. Common Pitfalls and Troubleshooting

Below are frequent issues you might encounter when bulk uploading images, along with suggested solutions:

- File Permissions: If files aren’t owned by the same user who’s running the script, uploads can fail. Solution: use chown or chmod to update permissions before uploading.

- Path Typos: A single path mistake in your script can skip entire directories. Double-check with ls or tab-completion prior to running bulk operations.

- Server Configuration Limits: Some servers limit the max upload size or connections. Adjust server configs (like nginx.conf or Apache’s .htaccess) accordingly.

- Network Bottlenecks: If you’re on a shared or metered connection, large uploads might saturate bandwidth. Consider scheduling them for off-peak hours or limiting upload speed using flags like --limit-rate in cURL.
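
Path typos in particular are cheap to catch with a preflight check that runs before any upload starts. The sketch below confirms the source directory exists and counts the images in it; preflight is a hypothetical name, and the .jpg and .png filter is an assumption.

```shell
# Preflight: confirm DIR exists and report how many images it holds.
# preflight <dir>
preflight() {
  local dir="$1" count
  [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
  count="$(find "$dir" -type f \( -name '*.jpg' -o -name '*.png' \) | wc -l | tr -d ' ')"
  [ "$count" -gt 0 ] || { echo "no images found in $dir" >&2; return 1; }
  echo "$count images ready in $dir"
}
```

Chaining it in front of the real command, as in `preflight /home/user/images && curl --limit-rate 500k --fail -F "file=@/home/user/images/photo.jpg" https://example.com/api/upload`, stops the run early when the path is wrong. The 500k cap and the file name are only examples.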

12. Real-World Use Cases

E-Commerce Platforms

Bulk uploading thousands of product photos or user-generated reviews can be routine. Automation ensures minimal downtime during updates or seasonal spikes (e.g., holiday promotions).

Educational Portals

Universities or online course providers often need to upload lecture thumbnails, slides, or reference images in large batches—CLI scripts help maintain consistency across multiple faculties or departments.

Media Companies

News outlets or content marketing agencies handle high-resolution image libraries. Secure, robust CLI workflows can reduce editorial overhead, letting journalists and contributors focus on content rather than manual uploads.

13. Extensibility and Future Trends

The CLI approach is highly extensible. As technology evolves, you can update your scripts to adapt to new:

- Storage Solutions: Switch from an on-premise server to cloud-based object storage with minimal changes to your CLI parameters.

- File Formats: WebP and AVIF are increasingly popular image formats. CLI tools generally require no major reconfiguration to handle them.

- CI/CD Tools: Evolving DevOps pipelines may incorporate container-based builds; you can embed your scripts in Docker containers for portable environments.

- AI and ML Integration: Future scripts might auto-generate alt text or run image quality checks before uploading using machine learning libraries integrated with your CLI processes.

By modularizing your approach—separating distinct concerns like image processing, uploading, and logging—your workflow remains easy to upgrade as new best practices emerge.

14. Conclusion

Bulk image upload does not need to be a manual, error-prone process. With command-line tools like cURL, rsync, scp, and cloud-specific utilities such as aws s3 or rclone, you can develop automated, scalable, and secure pipelines. Whether you’re managing a global e-commerce empire, powering an educational portal, or orchestrating a high-volume media site, adopting CLI-based workflows is one of the most efficient ways to handle large-scale uploads.

Key takeaways:

- Organize your directory structure and naming conventions before uploading.

- Choose the right CLI tool for your environment (SCP for secure server transfers, rsync for incremental syncing, AWS CLI for cloud scenarios, etc.).

- Leverage parallelization and automation to reduce time and manual effort.

- Maintain security with encryption, secure credentials, and minimal access.

- Implement robust error handling, verbose logging, and monitoring to ensure a smooth process.

- Keep your setup modular to adapt to new formats, platforms, or evolving best practices.

By following these guidelines, you can significantly streamline your bulk image upload process, reduce manual tasks, and prepare your workflow for future expansion. Now that you have a thorough roadmap, it’s time to put these strategies into action. Grab your favorite CLI tool, craft a script, and begin your journey toward more efficient, automated uploads. Enjoy the improved performance, reliability, and peace of mind!

Looking for more tips? Stay tuned for upcoming blog posts on continuous integration strategies for bulk uploads, in-depth tutorials on image transformations, and advanced use cases for multi-cloud deployments. Feel free to reach out or leave a comment with any questions or experiences you’d like to share. Here’s to faster, more reliable image management!